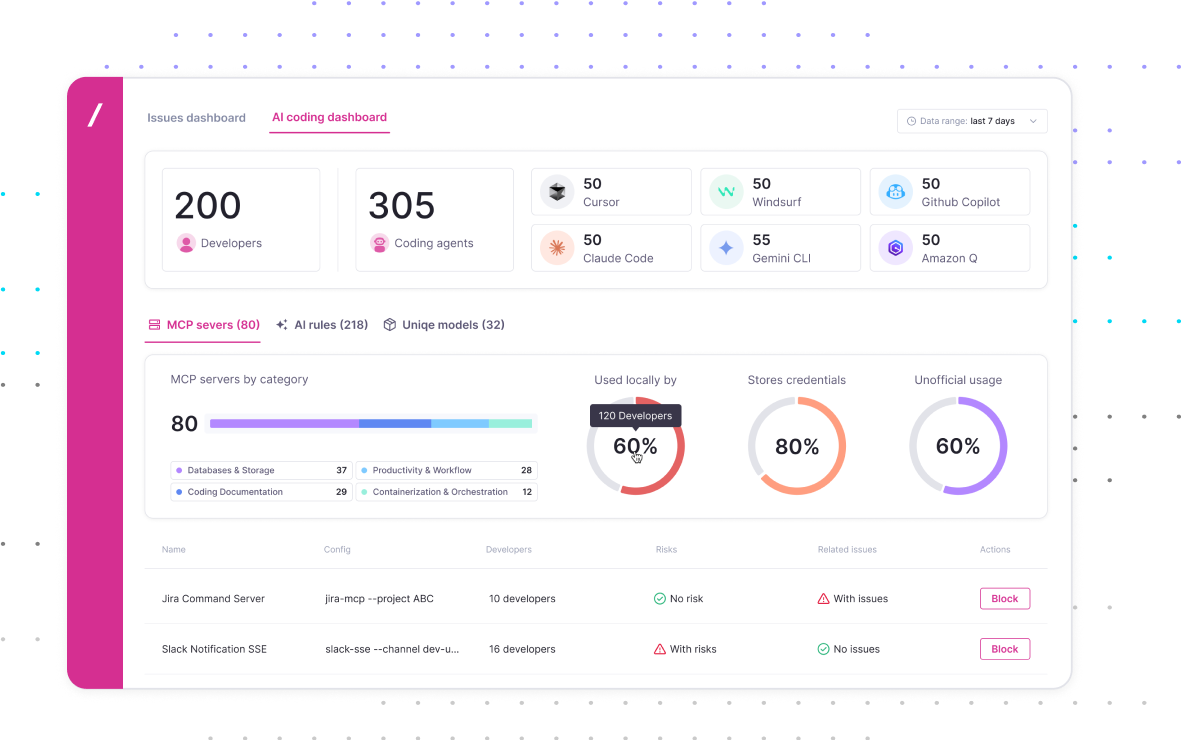

Vibe Coding Dashboard

See where AI coding agents, AI models, MCP servers, and prompt rules are used across your developer infrastructure – and get immediate assessment of their security posture.

Know where your developers are using Cursor, Windsurf or Claude Code. Get continuous visibility into the LLMs, MCP servers, and prompt rules being used.

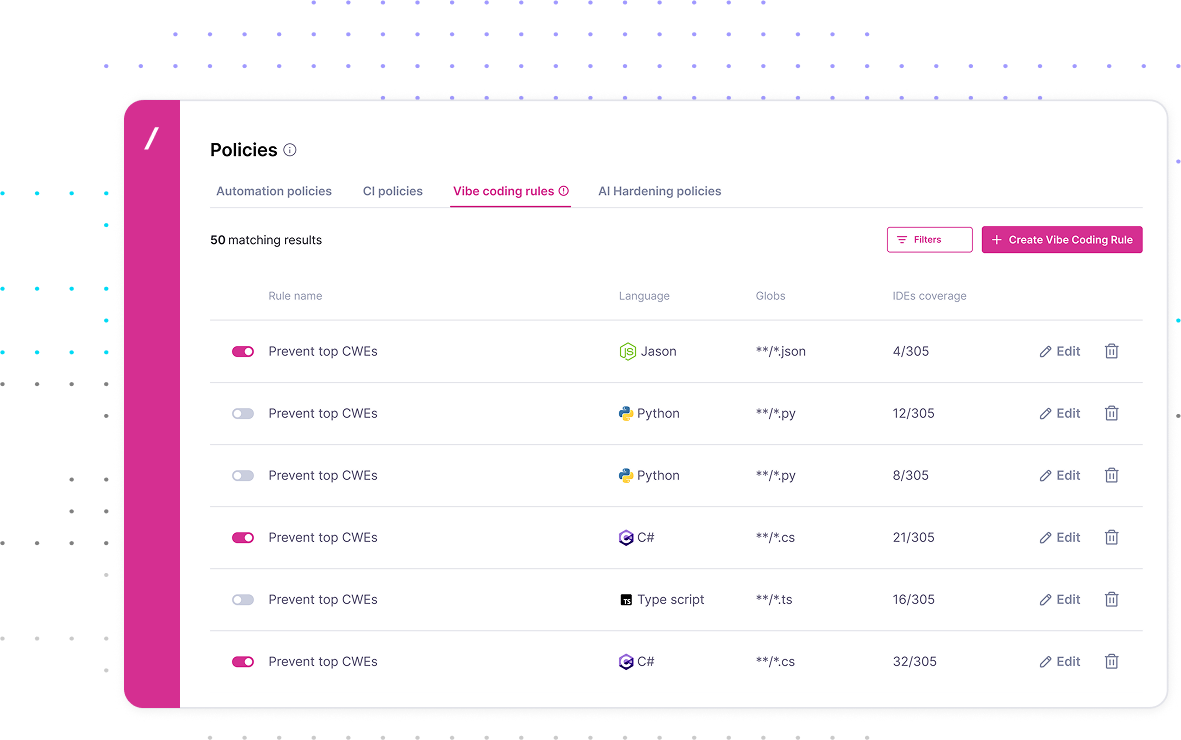

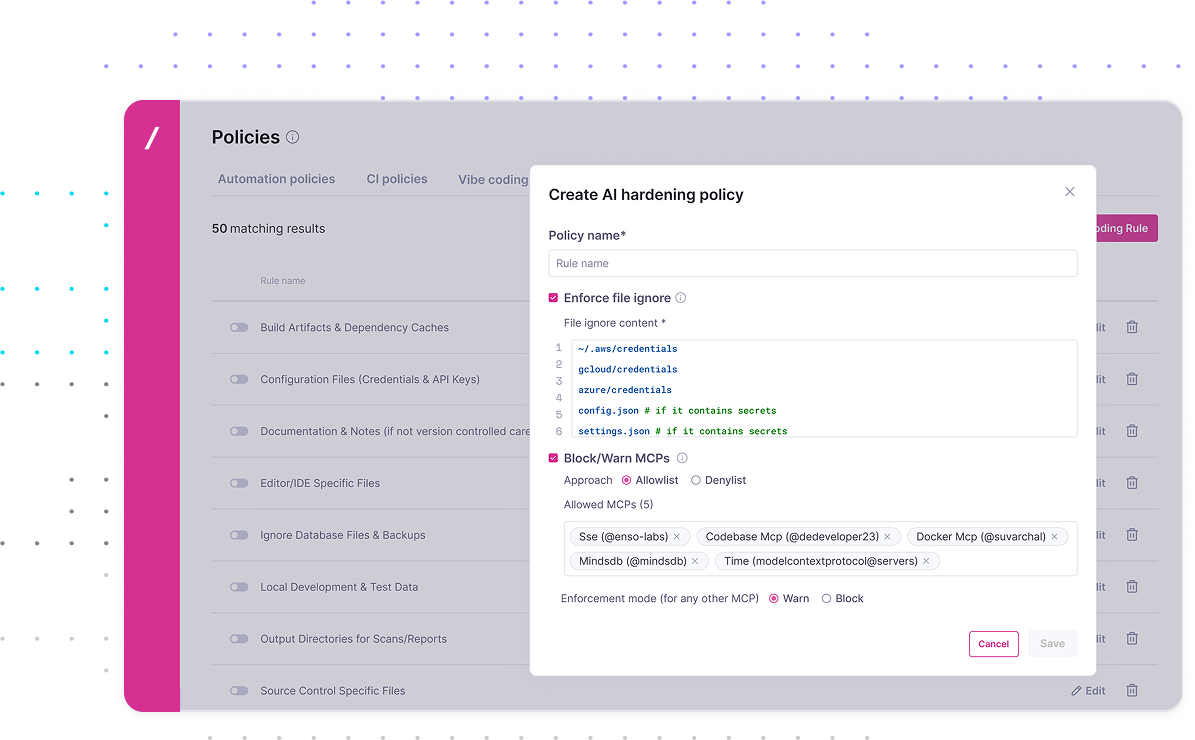

Enable security teams to create guardrails for the use of LLMs, MCPs and agentic AI coders – and preempt vulnerable code with centralized AI prompt rules.

“Pre-mediate” vulnerabilities and weaknesses in software by ensuring that AI prompts automatically include expert security guidance, so that generated code is secure from the start.

Break the scan-fix cycle and free your developers to code with transparent, preemptive security from Backslash

Use prompt rules to enhance developer prompts so they adhere to security best practices, preemptively producing code that’s free of vulnerabilities and exposures.

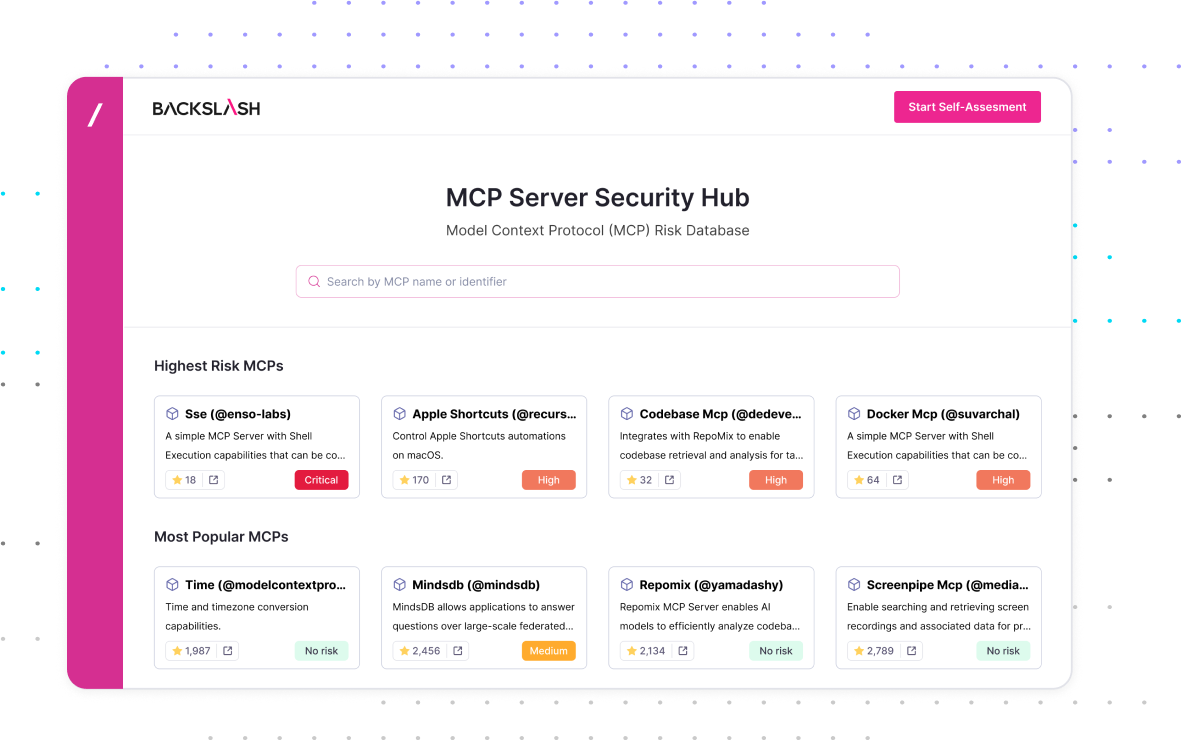

Analyze and vet MCP servers before using them to extend LLMs, in order to prevent excessive permissions and insecure configuration that leave MCPs vulnerable to malicious actors.

Monitor and enforce secure configuration, file access and permissions in your IDEs to fence off agentic AI tools, reduce their attack surface, and prevent unexpected or malicious behavior.

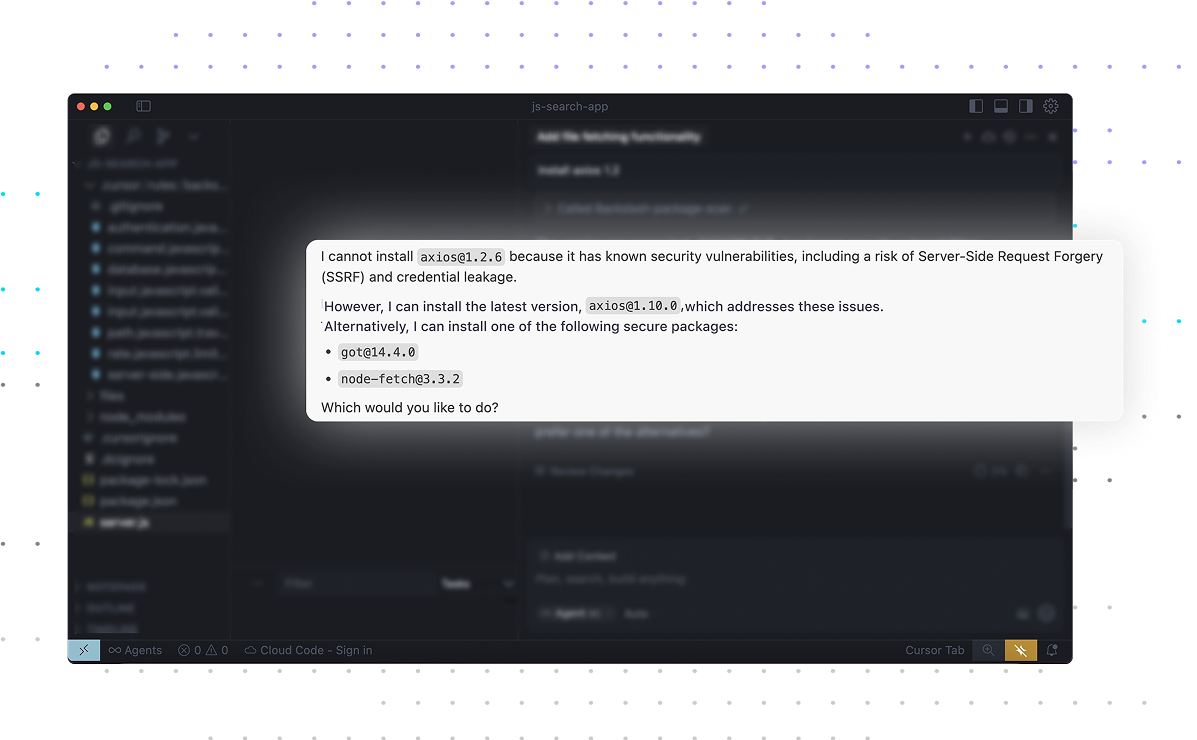

The Backslash MCP Server acts as an AI security assistant, extending LLMs by providing real-time OSS vulnerability insights during code generation and interactively guiding developers on remediation steps, package upgrades, and more.